Tutorial: Building a headless decisioning scenario with data flows in Pega 7.2.1

In this tutorial, you learn how to create and configure data flows that you can use to build a headless decisioning scenario on the Pega 7 Platform. The tutorial also describes how the data flows in this scenario are configured, and it links to additional resources about data flows and data sets.

- Prerequisites

- Creating an instance of the Data Flow rule called DecisionFlow

- Creating an instance of the Data Flow rule called ResponseFlow

- Configuration details of data flows

- Next steps

Prerequisites

Create NextBestAction and ResponseStrategy strategies.

For details on how to create strategies, see the PDN article Tutorial: Creating decision strategies for a headless decisioning scenario.

Create a CustomerTable data set of type Database Table in the DMOrg-DMSample-Data-Customer class. This data set contains all of the information about the users who log in to the website. When the data flow merges this data with the incoming customer record, the result is a full record of the user who logged in to the website.

For details on how to create data sets, see the About Data Set rules help topic.

Creating an instance of the Data Flow rule called DecisionFlow

Create an instance of the Data Flow rule that uses the NextBestAction strategy to select banners for a customer who logs in to the website.

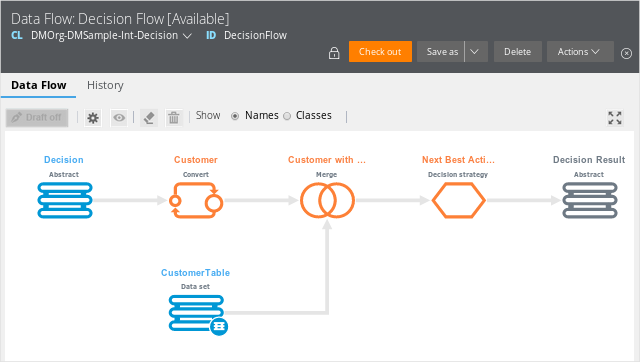

DecisionFlow Data Flow rule

- In the Application Explorer, right-click the DMOrg-DMSample-Int- class and select > > .

- Define the record configuration of the data flow.

- In the Label field, enter Decision Flow.

- Ensure that the Apply to field contains the class where you want to save the data flow (DMOrg-DMSample-Int-Decision).

- Click .

- Navigate to the shape, click the add icon on the right, and select .

- Open the component.

- In the , select the DMOrg-DMSample-Data-Customer class.

- Click .

- In the field, select .CustomerID.

- In the field, select .CustomerID.

- Click .

- Navigate to the shape, click the add icon on the right, and select . A secondary source component appears.

- Open the secondary source component.

- In the field, select .

- Select the CustomerTable data set in the DMOrg-DMSample-Data-Customer input class.

- Click .

- Open the component and specify the merge conditions.

- In the field, select .CustomerID.

- In the field, select .CustomerID.

- Click .

- Navigate to the shape, click the add icon on the right, and select .

- Configure the component.

Properties panel of the Strategy component in the Data Flow rule form

- In the field, select NextBestAction.

- Select the option.

- Store adaptive inputs and strategy results for 1 day.

- In the section, select the option.

- In the field, select the DMOrg-DMSample-Data-DecisionResult class.

- Click to map the properties between the target class (DMOrg-DMSample-Data-DecisionResult) and the source class (DMOrg-DMSample-SR):

- .DecisionResults to All results

- .CustomerID to Subject ID

- .InteractionID to InteractionID

- Click .

- Click .

Creating an instance of the Data Flow rule called ResponseFlow

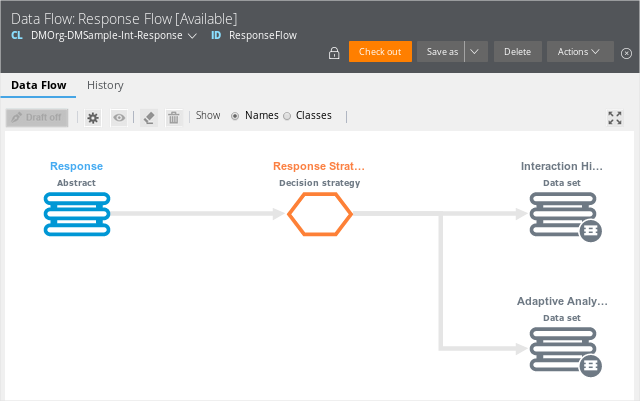

Create an instance of the Data Flow rule that writes the outcome of decisions results to Interaction History (the pxnteractionHistory data set) and Adaptive Decision Manager (the pxAdaptiveAnalytics data set). You can use Interaction History records results for reporting and future decisions. The second data set records data that is necessary to update the adaptive data store and refine adaptive models.

ResponseFlow Data Flow rule

- In the Application Explorer, right-click the DMOrg-DMSample-Int-Response class and select > > .

- Define the record configuration of the data flow.

- In the Label field, enter Response Flow.

- Ensure that the Apply to field contains the class where you want to save the data flow (DMOrg-DMSample-Int-Response).

- Click .

- Navigate to the shape, click the add icon on the right, and select .

- Open the component.

Properties panel of the Strategy component in the Data Flow rule form

- In the field, select ResponseStrategy.

- Select the option.

- Select the option and enter .InteractionID in the field.

- In the section, select the option.

- Click .

- Open the shape.

- In the field, select .

- In the field, select the InteractionHistory data set.

- Click .

- Navigate to the shape and click the add icon on the bottom.

- Open the second destination shape.

- In the field, select .

- In the field, select AdaptiveAnalytics.

- Click .

- Click .

After you create and configure all of the rules, the headless decisioning scenario is ready to use.

Configuration details of the data flows

Types of data flows

You can create three types of data flows on the Pega 7 Platform, depending on their primary input:

Batch data flows: Data flows of this type use a non-Stream data set (Database Table, Decision Data Store, HBase, or HDFS) or a report definition as the primary input.

Real-time data flows: Data flows of this type use a Stream data set as the primary input.

Abstract data flows: Data flows of this type use an abstract source instead of a concrete primary input. Select Abstract in the Source component of the data flow when you do not know in advance where the input data comes from. When the data flow is invoked, for example by an activity, the invoking activity provides the input data.
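The classification above can be sketched in Python as a small function. This is a conceptual model only, not a Pega API. `FlowType`, `classify_flow`, and the source labels in `NON_STREAM_SOURCES` are hypothetical names chosen for illustration, and `None` stands for an Abstract source.

```python
from enum import Enum
from typing import Optional


class FlowType(Enum):
    BATCH = "batch"
    REAL_TIME = "real-time"
    ABSTRACT = "abstract"


# Hypothetical labels for the non-Stream primary inputs listed in the text.
NON_STREAM_SOURCES = {
    "Database Table",
    "Decision Data Store",
    "HBase",
    "HDFS",
    "Report Definition",
}


def classify_flow(primary_input: Optional[str]) -> FlowType:
    """Return the data flow type implied by its primary input.

    None models an Abstract source, whose data is supplied by the caller.
    """
    if primary_input is None:
        return FlowType.ABSTRACT
    if primary_input == "Stream":
        return FlowType.REAL_TIME
    if primary_input in NON_STREAM_SOURCES:
        return FlowType.BATCH
    raise ValueError(f"unknown primary input: {primary_input!r}")
```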

Data flow components

Source and Destination

The Source and Destination components are the entry and exit points for the data that a data flow moves and processes. For more details about data flow components, see Data Flow rule form - Completing Data Flows.

The DecisionFlow data flow has an Abstract primary input and output. This means that the mechanism that triggers the data flow must provide the input data, and another mechanism must pick up the decision results and send them to the channel application. The primary input provides a page of class DMOrg-DMSample-Int-Decision that contains the .CustomerID property. The DecisionFlow data flow passes this page to the Convert component.
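The following Python sketch makes the Abstract input/output contract concrete. It models a page as a plain dictionary and models the data flow as a function. The caller invokes the function with its own input pages and picks up the returned pages. `run_abstract_flow` and the `Page` alias are hypothetical. On the Pega 7 Platform, data flows are invoked through rules such as activities, not through code like this.

```python
from typing import Callable, Dict, Iterable, List

Page = Dict[str, object]  # a clipboard page modeled as a plain dict (assumption)


def run_abstract_flow(pages: Iterable[Page],
                      process: Callable[[Page], Page]) -> List[Page]:
    """Model of a data flow with Abstract source and destination.

    The caller supplies the input pages (abstract source) and receives
    the processed pages back (abstract destination).
    """
    return [process(page) for page in pages]
```

For example, `run_abstract_flow([{"CustomerID": "C-1"}], lambda page: {**page, "Processed": True})` returns `[{"CustomerID": "C-1", "Processed": True}]`.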

Convert

The Convert component copies data from one class to another. Properties that have the same name in the source and target classes are copied automatically and overwrite the target values. Properties whose names differ between the source and target are mapped explicitly. In this tutorial, this component converts data from the DMOrg-DMSample-Int-Decision class to the DMOrg-DMSample-Data-Customer class.
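The following Python sketch illustrates the Convert behavior described above. It assumes that a class can be modeled as a set of property names. `convert`, `target_properties`, and `explicit_map` are hypothetical names, not part of the Pega 7 Platform.

```python
from typing import Dict, Optional, Set

Page = Dict[str, object]  # a clipboard page modeled as a plain dict (assumption)


def convert(source: Page,
            target_properties: Set[str],
            explicit_map: Optional[Dict[str, str]] = None) -> Page:
    """Copy a source page into a page of the target class.

    Properties with the same name in both classes are copied as-is.
    explicit_map maps a source property name to a differently named
    target property. Source properties with no target counterpart are
    dropped.
    """
    explicit_map = explicit_map or {}
    result: Page = {}
    for name, value in source.items():
        target_name = explicit_map.get(name, name)
        if target_name in target_properties:
            result[target_name] = value
    return result
```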

Merge

In this component, the data flow merges the customer record identified by .CustomerID with the record from the secondary source (the CustomerTable data set). To merge the data successfully, both records must have the same .CustomerID. In this tutorial, the secondary source is a Database Table data set that contains the data for all customers.

Make sure that both inputs to the Merge component (the output of the Convert component and the output of the secondary Source component) belong to the same class. In this tutorial, that class is DMOrg-DMSample-Data-Customer.

The output of the Merge component provides the full customer record for the particular .CustomerID that was sent by the channel application. This full record can be used to execute the NextBestAction strategy.
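Below is a minimal Python sketch of the merge step. It assumes that the secondary source (the CustomerTable data set) is indexed by .CustomerID into a dictionary. `index_by_key` and `merge_on_key` are hypothetical helpers. Two behaviors are assumptions for illustration rather than documented Pega behavior: values from the primary record win when both records define the same property, and a record with no matching .CustomerID is dropped.

```python
from typing import Dict, Iterable, Optional

Page = Dict[str, object]  # a clipboard page modeled as a plain dict (assumption)


def index_by_key(records: Iterable[Page], key: str = "CustomerID") -> Dict[object, Page]:
    """Index secondary-source records by their key property."""
    return {record[key]: record for record in records}


def merge_on_key(primary: Page,
                 secondary_by_key: Dict[object, Page],
                 key: str = "CustomerID") -> Optional[Page]:
    """Merge the primary record with the secondary record that has the same key.

    Returns None when no secondary record matches (assumed behavior).
    """
    match = secondary_by_key.get(primary.get(key))
    if match is None:
        return None
    # Start from the full secondary record, then let primary values override
    # any property that both records define (assumed precedence).
    return {**match, **primary}
```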

Strategy

A strategy combines adaptive models and business rules. When you use a strategy in a headless decisioning scenario, you can use data flows to manage the decision-response cycle. In this tutorial, the DecisionFlow data flow is where the customer is offered three banners and then decides whether to click one of them.

To record customers' decisions, the ServiceActivity activity triggers the ResponseFlow data flow. When this flow runs, it saves each user's decision (which banner was clicked) together with the data that was used to compute which three banners to display on the website. The flow references two data sets: one stores new data for the adaptive models, and the other stores the customers' interaction history (the decisions they made).
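The response side can be sketched in Python as follows. The sketch assumes that the data used for each decision was stored under its interaction ID (the DecisionFlow stores adaptive inputs and strategy results, and the ResponseFlow is configured with .InteractionID). Each response is then written to two destinations. `ResponseSink`, `record_response`, and the `AdaptiveInputs` and `Outcome` fields are hypothetical. The two lists only stand in for the pxInteractionHistory and pxAdaptiveAnalytics data sets.

```python
from dataclasses import dataclass, field
from typing import Dict, List

Page = Dict[str, object]  # a clipboard page modeled as a plain dict (assumption)


@dataclass
class ResponseSink:
    """Hypothetical stand-ins for the two destination data sets."""
    interaction_history: List[Page] = field(default_factory=list)
    adaptive_analytics: List[Page] = field(default_factory=list)


def record_response(response: Page,
                    stored_decisions: Dict[str, Page],
                    sink: ResponseSink) -> None:
    """Look up the decision stored under the response's InteractionID and
    write one record to each destination."""
    decision = stored_decisions[response["InteractionID"]]
    # Interaction history: the customer's outcome, for reporting and future decisions.
    sink.interaction_history.append({**response, "CustomerID": decision["CustomerID"]})
    # Adaptive analytics: the inputs used for the decision plus the outcome,
    # which is what an adaptive model needs in order to learn.
    sink.adaptive_analytics.append(
        {"Inputs": decision["AdaptiveInputs"], "Outcome": response["Outcome"]}
    )
```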

When you reference a strategy in a data flow, you must specify how the strategy results are output. You can:

- Output the results individually on the SR class.

- Select the input class of the Strategy component as the output class.

- Create an instance of a completely different class to minimize the amount of data that is sent. For this option, you need to map properties between the classes to retrieve the adaptive payload and decision results.
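The third option corresponds to the mapping configured in the DecisionFlow steps: All results to .DecisionResults, Subject ID to .CustomerID, and InteractionID to .InteractionID. The Python sketch below models that mapping with hypothetical dataclasses. `StrategyResult`, `DecisionResult`, and `to_decision_result` are illustrative names. The sketch assumes that all results from one decision share the same subject ID and interaction ID.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StrategyResult:
    """Hypothetical model of one strategy result (SR) page."""
    subject_id: str
    interaction_id: str
    proposition: str


@dataclass
class DecisionResult:
    """Hypothetical model of the DMOrg-DMSample-Data-DecisionResult class."""
    CustomerID: str
    InteractionID: str
    DecisionResults: List[StrategyResult]


def to_decision_result(all_results: List[StrategyResult]) -> DecisionResult:
    """Wrap all strategy results of one decision in a single output page.

    Mapping: All results -> .DecisionResults, Subject ID -> .CustomerID,
    InteractionID -> .InteractionID.
    """
    if not all_results:
        raise ValueError("strategy returned no results")
    first = all_results[0]  # assumes every result shares subject and interaction IDs
    return DecisionResult(
        CustomerID=first.subject_id,
        InteractionID=first.interaction_id,
        DecisionResults=list(all_results),
    )
```

In this model, the channel application receives one page per decision that carries the customer ID and interaction ID once, rather than one SR page per banner.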

Data Set types

The data sets referenced in data flows represent data stored in different sources and formats. When the data flow is invoked, its components move and process the data from the data source.

For more information about Data Set types, see Data Set rule form - Completing Data Sets.

Next steps

Now that you have prepared all the rule instances that are necessary in the headless decisioning scenario, you can execute the DecisionFlow and ResponseFlow data flows and use the headless decisioning scenario.
