Tutorial: Using managed data flow runs

Pega Platform™ manages the life cycle of data flow runs that you include in your application. The system keeps the runs active and automatically updates them every time you move the application or make changes to the runs. As a result, you do not have to re-create the runs and manually restart them after you send your changes to a new environment. Managed runs are started or paused automatically based on the availability of the related data flow service.

Managed data flow runs simplify testing and adjusting data flow runs, because your changes take effect without manually restarting or re-creating the runs.
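You can model the life cycle behavior described above as a small state machine. The following Python sketch is a conceptual illustration only: the `ManagedRun` class, its states, and its methods are hypothetical names and are not part of any Pega Platform API. It assumes that a change pauses the run, the run picks up the new rules, and the run resumes only while the data flow service is available.

```python
from enum import Enum


class RunState(Enum):
    ACTIVE = "active"
    PAUSED = "paused"


class ManagedRun:
    """Hypothetical model of a managed data flow run; not a Pega Platform API."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.service_available = False
        self.rule_version = 0  # incremented each time the run picks up changes
        self.history = [RunState.PAUSED]  # every state the run has entered

    @property
    def state(self) -> RunState:
        return self.history[-1]

    def _enter(self, state: RunState) -> None:
        self.history.append(state)

    def on_service_availability(self, available: bool) -> None:
        # Start or pause the run based on the data flow service status.
        self.service_available = available
        self._enter(RunState.ACTIVE if available else RunState.PAUSED)

    def on_rules_changed(self) -> None:
        # A changed run, data flow, or imported ruleset: pause, pick up the
        # new rule version, then resume only if the service is available.
        self._enter(RunState.PAUSED)
        self.rule_version += 1
        if self.service_available:
            self._enter(RunState.ACTIVE)


run = ManagedRun("Detect Dropped Calls run")
run.on_service_availability(True)  # service comes up: the run starts
run.on_rules_changed()             # for example, the event strategy was edited
print(run.state, run.rule_version)
```

In this sketch, nobody calls a restart method: the pause and resume are side effects of the change events, which mirrors why you do not have to restart a managed run yourself.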

Use case

The uPlusTelco company wants to take care of its most valuable customers. To keep these customers engaged, the company wants to automatically present them with new and exciting offers when their calls to a customer service representative (CSR) do not get through. uPlusTelco prepared a data flow that detects dropped calls, determines the importance of the customer, and creates a case that lists eligible offers for priority customers. To gather the necessary data for the data flow, uPlusTelco needs to create a real-time run that it can continuously adjust to keep the process accurate and relevant. The company makes all changes in a test environment and then moves the application to a production environment, where the runs operate.

The use case is based on the following tasks:

- Creating a managed data flow run

- Managing the life cycle of the data flow run

- Managing the life cycle of the application

Creating a managed data flow run

uPlusTelco will use an existing data flow as the basis for a run that collects data in real time. The company wants Pega Platform to manage the run automatically to simplify and speed up the process.

Create and configure your data flow run:

- In Dev Studio, create a data flow run. For more information, see Creating a real-time run for data flows.

- Associate the run with the Detect Dropped Calls data flow. The data flow triggers a case if it detects three dropped calls over a specified timeframe.

The Detect Dropped Calls data flow

- Select Manage the run and include it in the application. When you select this option, the application manages the life cycle of the run.

The following video demonstrates the creation of real-time data flow runs:

Managing the life cycle of the data flow run

uPlusTelco wants to keep customizing the run and adjusting the data flow to make sure that the responses are timely and the offers are relevant. Reach those goals by performing the following tasks:

Modifying the event strategy

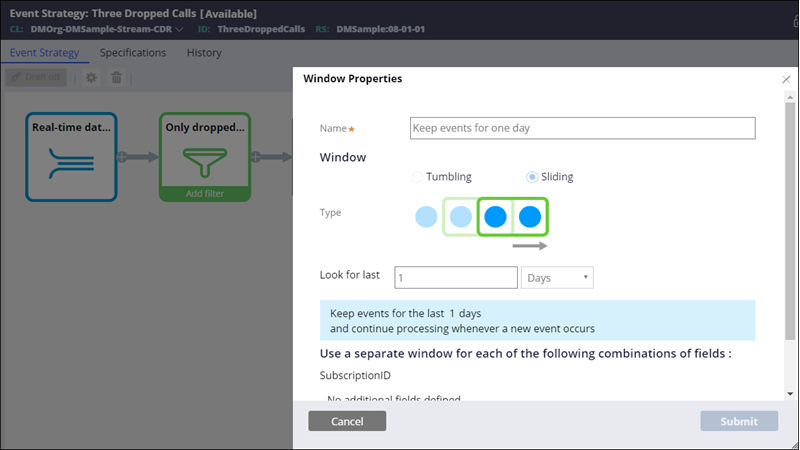

uPlusTelco determines that one day is not always enough time for the data flow to trigger an action, so the company expands the timeframe from one day to two days. With a one-day timeframe, the count of dropped calls restarts every day. With a two-day timeframe, dropped calls accumulate over two days, so the data flow triggers cases more often for customers who usually have fewer than three dropped calls per day.
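To see why a longer timeframe triggers more cases, consider the following Python sketch. It assumes a tumbling (non-overlapping) window in which the count restarts at each window boundary, as the paragraph above describes. The `triggered_customers` function and the integer day numbers are hypothetical and do not reflect how Pega Platform implements the Window shape.

```python
from collections import defaultdict


def triggered_customers(dropped_calls, window_days, threshold=3):
    """Return the customers whose dropped-call count reaches `threshold`
    within a single tumbling window of `window_days` days.

    dropped_calls: iterable of (customer_id, day) pairs, with day as an int.
    """
    counts = defaultdict(int)  # (customer_id, window_index) -> count
    triggered = set()
    for customer_id, day in dropped_calls:
        window_index = day // window_days  # the count restarts in each window
        counts[(customer_id, window_index)] += 1
        if counts[(customer_id, window_index)] >= threshold:
            triggered.add(customer_id)
    return triggered


# Customer "C1" drops two calls on day 0 and one call on day 1.
calls = [("C1", 0), ("C1", 0), ("C1", 1)]
print(triggered_customers(calls, window_days=1))  # set()
print(triggered_customers(calls, window_days=2))  # {'C1'}
```

With a one-day window, the three calls fall into two separate windows and never reach the threshold; with a two-day window, they land in the same window and trigger a case.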

Change the time over which the data flow run collects dropped calls:

- Open the Three Dropped Calls event strategy that the Detect Dropped Calls data flow uses.

- On the Event Strategy tab, open the Window shape.

The Window shape in the Event Strategy

- Set the number of days to 2 and then click Submit.

The run pauses and then resumes work to implement the changes.

Adding a filter component to the data flow

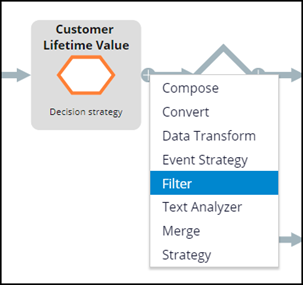

uPlusTelco wants to retain information only about dropped calls from its more valuable customers, whose Customer Lifetime Value (CLV) is at least 1000.

Add a filter to the data flow to limit the amount of data that the data flow processes:

- Open the Detect Dropped Calls data flow.

- On the Customer Lifetime Value strategy component, click the Plus icon.

Adding filters

- Add a filter component with a when condition that passes only the data for customers whose CLV is 1000 or more. For more information, see Filtering incoming data.

- Save the data flow.

The run pauses and then resumes work to implement the changes.
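The when condition in the filter component behaves like a predicate that each record must satisfy before it reaches the next shape. The following Python sketch assumes a hypothetical `DroppedCallEvent` record with a `clv` field; in Pega Platform, you configure the condition in the filter component rather than in code.

```python
from dataclasses import dataclass

CLV_THRESHOLD = 1000  # from the tutorial: keep customers with CLV of 1000 or more


@dataclass
class DroppedCallEvent:
    customer_id: str
    clv: float  # Customer Lifetime Value (hypothetical field name)


def is_valuable(event: DroppedCallEvent) -> bool:
    # Equivalent of the when condition: CLV of 1000 or more.
    return event.clv >= CLV_THRESHOLD


def filter_events(events):
    # Only events that satisfy the condition continue to the next shape.
    return [e for e in events if is_valuable(e)]


events = [
    DroppedCallEvent("C1", 1500.0),
    DroppedCallEvent("C2", 999.0),
    DroppedCallEvent("C3", 1000.0),
]
kept = filter_events(events)
print([e.customer_id for e in kept])  # ['C1', 'C3']
```

Because the comparison is `>=`, a customer with a CLV of exactly 1000 passes the filter, which matches "at least 1000".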

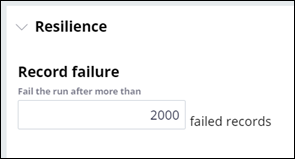

Modifying the error threshold

To make the run more tolerant of errors and keep the process uninterrupted, uPlusTelco decides to set the error threshold to 2000 failed records.

Adjust the number of failed records after which the run fails and stops:

- Open your managed run.

- On the Details tab, in the Record failure field, change the value to 2000.

The Resilience section

- Save the run.

The run pauses and then resumes work to implement the changes.
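You can read the record failure threshold as a counter that tolerates bad records until their number exceeds the limit. The following Python sketch assumes that the run fails once the number of failed records is greater than the configured value. `process_records`, `RunFailedError`, and `handle_call_record` are hypothetical names, not Pega Platform APIs.

```python
class RunFailedError(Exception):
    """Raised when the number of failed records exceeds the threshold."""


def process_records(records, handler, max_failed_records=2000):
    """Apply `handler` to each record and fail the run after more than
    `max_failed_records` failures. Returns (processed, failed)."""
    processed = 0
    failed = 0
    for record in records:
        try:
            handler(record)
            processed += 1
        except ValueError:
            # A bad record is counted and skipped; the run keeps going.
            failed += 1
            if failed > max_failed_records:
                raise RunFailedError(
                    f"{failed} failed records exceed the limit of {max_failed_records}"
                )
    return processed, failed


def handle_call_record(record: int) -> None:
    # Hypothetical handler: negative values stand in for malformed records.
    if record < 0:
        raise ValueError(f"malformed record: {record}")


records = [1, -1, 2, -2, 3]
print(process_records(records, handle_call_record, max_failed_records=2))  # (3, 2)
```

Under these assumptions, a threshold of 2000 lets the run absorb up to 2000 bad records and stop only on the next failure, so occasional malformed events do not interrupt processing.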

Managing the life cycle of the application

To continuously improve the process, the company moves the ruleset between environments throughout the life cycle of the application. Customization and testing take place only in the test environment. In the production environment, all changes arrive through imports of modified rulesets from the test environment. Because the run pauses and restarts automatically to accommodate the changes, users might not notice that anything happened.

Importing the changes to the production environment

After introducing changes to the data flow and the run, uPlusTelco wants to move the application along with the run to the production environment.

Move your application to the production environment:

- In the production environment, in Dev Studio, click Configure > Application > Distribution > Import.

- Import the RAP file with your ruleset to the production environment. For more information, see Import wizard landing page.

The run pauses and restarts with all the changes you made in the test environment.

Conclusions

You created and configured a data flow run that picks up data in real time and is managed by your application. You made changes to the run in the test environment and then moved the application to the production environment, where your changes took effect. Because you included the run in your application, you did not have to restart it after every change or re-create it when you moved the application to another environment. The application kept the run active, which saved time and simplified the entire process.
