Case archiving and purging overview

Use case archiving to archive and purge cases, which improves the performance of your cloud-based instance of Pega Platform and reduces storage costs. To meet your business data retention policy, use the Pega Platform expunge functionality to permanently delete data from Pega Cloud File Storage (PCFS). Understand how case archiving works so that you can effectively plan and implement a case archiving policy in Pega Platform.

- How is the term "archival process" used in this documentation?

- An archival process is a set of jobs that copy case data to PCFS, index it, purge it from the primary database, and permanently delete it by using an expunge job.

- How is the term "expunge" used in this documentation?

- Expunge means permanent deletion of archived data from PCFS. Expunge jobs are used to implement your data retention policy.

Case hierarchy requirements for case archiving and purging

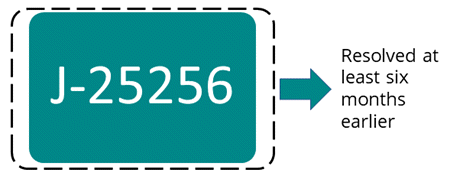

Case hierarchy requirements depend on the following case structures:

- Stand-alone case

- A case unit that contains a single case. This type of case has neither a child case nor a parent case whose case type uses an archival policy. To define a stand-alone case policy, set an archival policy on that case type. Pega Platform can archive or expunge a stand-alone case if the case has been resolved for at least as long as its archival policy specifies.

- Case hierarchy

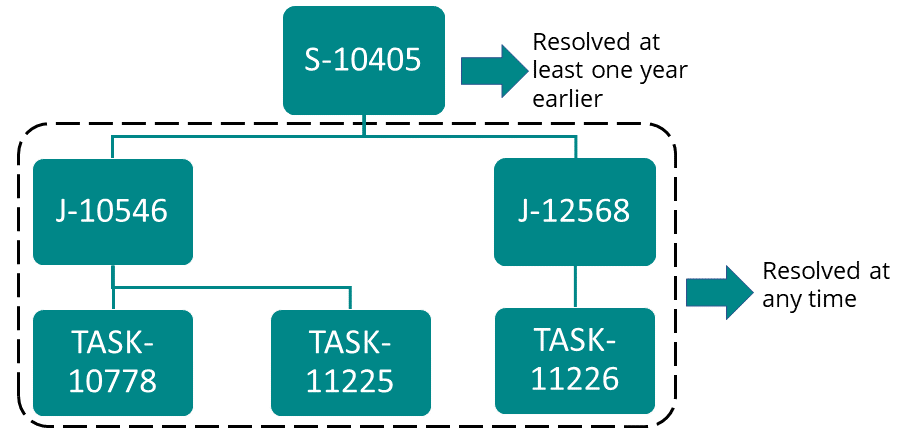

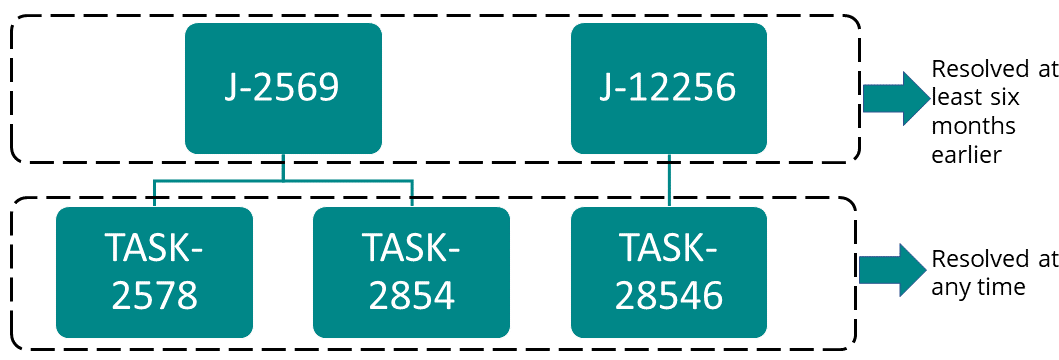

- A case unit that contains parent cases, child cases, or both. Pega Platform applies the archival policy to the entire hierarchy. You must set an archival policy for the top-level case, because the case type of the top-level case determines the archival policy of the entire hierarchy. Pega Platform can archive all cases in the hierarchy if they meet the following conditions:

  - The top-level case has been resolved for at least as long as its archival policy specifies.

  - All sub-level parent and child cases are resolved. Note the following sub-conditions:

    - Sub-level parent and child cases do not need to have an archival policy defined.

    - Sub-level parent and child cases can be resolved at any time before the archival job runs.

If the case structure does not meet these conditions, the archival job cannot process that case structure.
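The eligibility rules for stand-alone cases and case hierarchies can be expressed as a short check. The following Python sketch is a hypothetical model, not Pega Platform code: the `Case` class, its `resolved_at` and `archival_period` fields, and the `is_eligible_for_archival` function are illustrative names, and Pega Platform evaluates these conditions inside its own archival jobs. A stand-alone case is modeled as a top-level case with no child cases.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Case:
    """Hypothetical case model for illustration; not a Pega Platform class."""
    case_id: str
    resolved_at: Optional[datetime]               # None while the case is unresolved
    archival_period: Optional[timedelta] = None   # policy needed only on the top-level case
    children: List["Case"] = field(default_factory=list)


def all_resolved(case: Case) -> bool:
    """Return True if this case and every case below it are resolved."""
    return case.resolved_at is not None and all(all_resolved(c) for c in case.children)


def is_eligible_for_archival(top: Case, now: datetime) -> bool:
    """Check a stand-alone case or a whole hierarchy against the archival rules."""
    # The top-level case must have an archival policy and be resolved.
    if top.archival_period is None or top.resolved_at is None:
        return False
    # It must have been resolved for at least as long as its policy specifies.
    if now - top.resolved_at < top.archival_period:
        return False
    # Sub-level cases only need to be resolved; only the top-level policy counts.
    return all(all_resolved(child) for child in top.children)


now = datetime(2024, 6, 1)
standalone = Case("C-1", resolved_at=now - timedelta(days=40), archival_period=timedelta(days=30))
print(is_eligible_for_archival(standalone, now))  # True

open_child = Case("C-3", resolved_at=None)
parent = Case("C-2", resolved_at=now - timedelta(days=40),
              archival_period=timedelta(days=30), children=[open_child])
print(is_eligible_for_archival(parent, now))  # False: a child case is still open
```

Checking the whole hierarchy recursively mirrors the rule that the archival job processes the hierarchy as one unit: a single unresolved case anywhere below the top level blocks archiving for every case in it.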

Case hierarchy requirements for expunging

- Stand-alone case and case hierarchy

- Pega Platform permanently deletes previously archived stand-alone cases and case hierarchies from Pega Cloud File Storage after you set a permanent deletion policy for them. Expunge jobs do not process cases that were not archived.

Artifacts that are archived during an archival process

The archival process can also archive certain artifacts within a case. The following table shows which artifacts Pega Platform archives and which it does not:

Artifacts that are archived in Pega Platform

| Archived artifacts | Non-archived artifacts |
| --- | --- |

Case archiving process

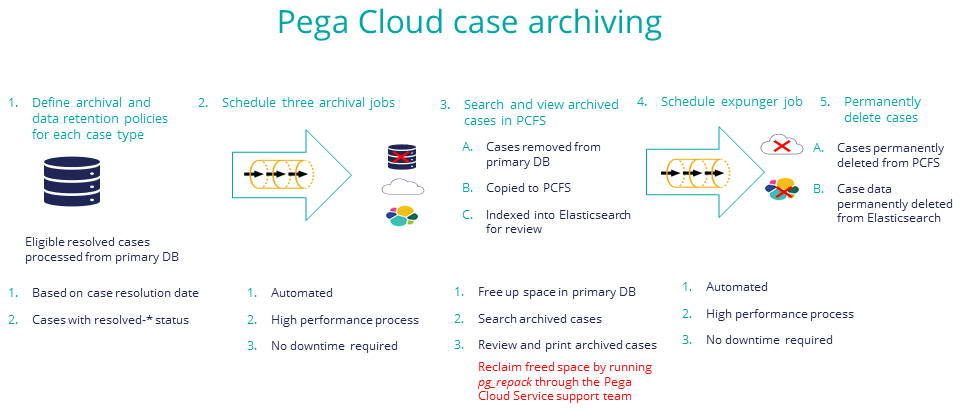

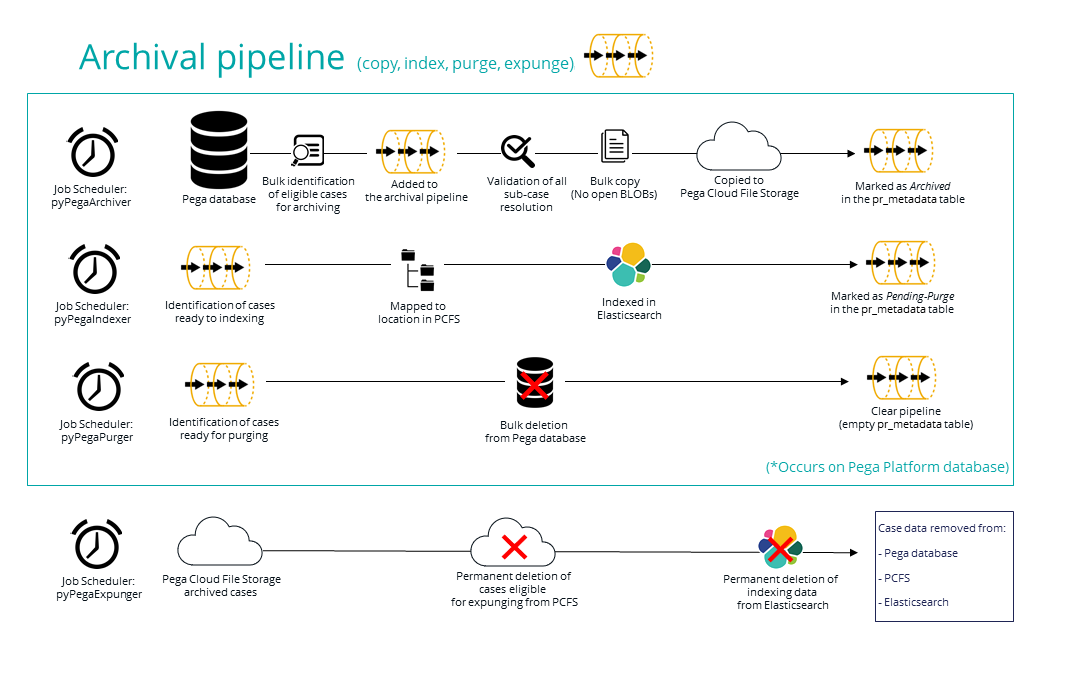

To archive cases, Pega Platform uses different jobs that you set up through Job Schedulers to copy, index, and purge specific artifacts in stand-alone cases and case hierarchies.

Archive and purge jobs and processes

| Job Scheduler | Implementation and description |
| --- | --- |
| pyPegaArchiver | The pyPegaArchiver Job Scheduler (default short description: Archival_Copier) copies files to Pega Cloud File Storage. |
| pyPegaIndexer | The pyPegaIndexer Job Scheduler (default short description: Archival_Indexer) indexes the copied files into Elasticsearch. The index keeps the association between an archived case and its archived file in Pega Cloud File Storage. |
| pyPegaPurger | The pyPegaPurger Job Scheduler (default short description: Archival_Purger) deletes cases and their associated data from the primary database. The job also runs a SQL VACUUM command to reclaim the storage space that the deleted data occupied. |
| pyPegaExpunger | The pyPegaExpunger Job Scheduler (default short description: Archival_Expunger) permanently deletes archived files from Pega Cloud File Storage and removes the data for the corresponding cases from the Elasticsearch index. |
| Optional: pyArchival_ReIndexer | The pyArchival_ReIndexer Job Scheduler (default short description: Archival_ReIndexer) repairs corrupted Elasticsearch indexes. To repair case archives, run this job after the archival and purge jobs. |
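To show how the jobs in this table hand data to one another, the following Python sketch models the primary database, Pega Cloud File Storage, and the Elasticsearch index as in-memory dictionaries. It is a hypothetical illustration, not Pega code: `ArchivalModel` and its methods are invented names, and the rule that only indexed cases are purged is an assumption made so that the model follows the copy, index, purge order. The optional re-indexer is not modeled.

```python
from typing import Dict, Iterable, List


class ArchivalModel:
    """Hypothetical in-memory model of the archival jobs; not a Pega API.

    primary_db stands in for the primary database, pcfs for Pega Cloud File
    Storage, and index for the Elasticsearch index.
    """

    def __init__(self, cases: Dict[str, str]) -> None:
        self.primary_db: Dict[str, str] = dict(cases)  # case ID -> case data
        self.pcfs: Dict[str, str] = {}                 # archive file name -> case data
        self.index: Dict[str, str] = {}                # case ID -> archive file name
        self._unindexed: List[str] = []                # copied but not yet indexed

    def copy(self, case_ids: Iterable[str]) -> None:
        """Like Archival_Copier: copy case data to PCFS; the database row stays."""
        for cid in case_ids:
            self.pcfs[f"{cid}.archive"] = self.primary_db[cid]
            self._unindexed.append(cid)

    def index_copies(self) -> None:
        """Like Archival_Indexer: link each copied case to its archive file."""
        for cid in self._unindexed:
            self.index[cid] = f"{cid}.archive"
        self._unindexed.clear()

    def purge(self) -> None:
        """Like Archival_Purger: delete archived cases from the primary database.

        Assumption: only indexed cases are purged, so no case is deleted
        before its archive can be found again.
        """
        for cid in list(self.primary_db):
            if cid in self.index:
                del self.primary_db[cid]

    def expunge(self, case_ids: Iterable[str]) -> None:
        """Like Archival_Expunger: permanently delete archive files and index entries.

        Cases that were never archived have no index entry and are skipped.
        """
        for cid in case_ids:
            name = self.index.pop(cid, None)
            if name is not None:
                del self.pcfs[name]


m = ArchivalModel({"C-1": "data-1", "C-2": "data-2"})
m.copy(["C-1"])
m.index_copies()
m.purge()
print(m.primary_db)  # {'C-2': 'data-2'}
m.expunge(["C-1", "C-2"])  # C-2 was never archived, so it is skipped
print(m.pcfs, m.index)  # {} {}
```

After purging, the archived case exists only in the file store and the index, which is why the expunge step must clean up both.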
