Figuring out how to properly test the tools we build has always been a challenge because of the number of technologies used to build them, including Java, JavaScript, HTML, and CSS. The challenge is compounded by the many ways our tools can be used and by the range of UI testing tools available on the market.

Finding a testing tool that meets all of our needs has also been demanding, and over the years we’ve used a number of different tools for writing test automation. In fact, we started off without test automation. During this time, we tested all of our features manually, which grew to be a rather daunting task given the large number of features that made up our product. Next, we moved to eggPlant, which uses image recognition to navigate through and test our generated UI. We then moved to Telerik, followed by RSpec, both of which test by inspecting the Document Object Model (DOM). More recently we’ve been placing an emphasis on writing JUnit tests to evaluate our Java code and making sure those JUnit tests are true serverless unit tests. For testing our JavaScript code we’ve been using Jasmine, which is essentially JUnit for JavaScript.

We’ve tried a number of testing tools, and along the way we’ve picked up a few key takeaways about testing our UI.

All testing technologies have their pros and cons

You need to find the ones that work for you. In our case this has meant multiple testing technologies. We found that JUnit and Jasmine are great for testing low-level logic, but that eggPlant and RSpec were really the best tools for testing the UI that we generate. It’s the combination of these tools that gives us the test coverage and confidence in the quality of our product that we need.

It’s important to find the right ratio of tests

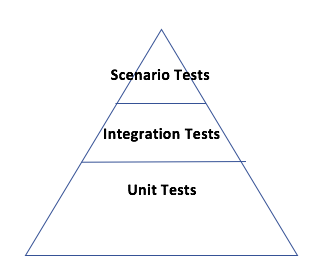

Finding the right ratio helps ensure the correct code and feature coverage, as well as the stability of the automated tests you’re executing when using multiple testing technologies. We try to follow the industry concept of the Test Pyramid across the organization, a common metaphor that indicates, by proportion, what kinds of tests we should be writing.

Scenario tests exercise multiple features together. Most tests written with eggPlant or RSpec fall into this category. These tests tend to be long-running and can be unstable compared to true unit tests, so we try to minimize the number of tests in this category, even though they are the more thorough way to test our UI. Sometimes we end up spending more on maintaining scenario tests than we get back from the bugs they find.

Integration (or functional) tests exercise features or components together. These can be unstable and long-running, but they provide a good amount of coverage.

We write unit tests with JUnit and Jasmine. These tests target specific functions in your code and rely on mocking, which involves creating an object that mimics the behavior of a real object. They are small tests that execute very quickly and are almost always very stable, but they don’t provide a good amount of test coverage by themselves.
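To make the idea of mocking concrete, here is a minimal sketch in plain Java. It is not taken from our code base: `PriceService`, `CartCalculator`, and `MockPriceService` are hypothetical names invented for illustration, and the mock is written by hand rather than with a mocking library so the example stays self-contained. In a real suite the checks in `main` would live in a JUnit test method.

```java
import java.util.ArrayList;
import java.util.List;

public class MockingSketch {
    // Hypothetical dependency that would normally call out to a server.
    interface PriceService {
        double priceOf(String itemId);
    }

    // Hypothetical class under test: it only knows about the interface.
    static class CartCalculator {
        private final PriceService prices;

        CartCalculator(PriceService prices) {
            this.prices = prices;
        }

        double total(List<String> itemIds) {
            double sum = 0.0;
            for (String id : itemIds) {
                sum += prices.priceOf(id);
            }
            return sum;
        }
    }

    // Hand-written mock: returns a canned price and records every call,
    // so the test never needs a running server.
    static class MockPriceService implements PriceService {
        final List<String> requested = new ArrayList<>();

        @Override
        public double priceOf(String itemId) {
            requested.add(itemId);
            return 2.5;
        }
    }

    public static void main(String[] args) {
        MockPriceService mock = new MockPriceService();
        CartCalculator calculator = new CartCalculator(mock);

        double total = calculator.total(List.of("a", "b"));

        // Check the result and the interaction with the mocked dependency.
        if (total != 5.0) {
            throw new AssertionError("unexpected total: " + total);
        }
        if (!mock.requested.equals(List.of("a", "b"))) {
            throw new AssertionError("unexpected calls: " + mock.requested);
        }
        System.out.println("total = " + total);
    }
}
```

Because the calculator depends only on the interface, the test controls every input and runs in milliseconds, which is where the speed and stability of this layer come from.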

Choosing where your tests will run

It’s important to think about where in your continuous integration and continuous delivery process you want your tests to run. Does a test cover a critical piece of functionality? In that case it should probably be executed whenever a developer merges code. Does the test take a while to execute? Maybe that test should be run at a later point rather than at merge time, because we need developers to receive instant feedback on the quality of what they’re merging. If a test covers one-off edge cases or features that are not widely used, then it could be run as part of a larger full regression test suite that might only be run once a day.
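The questions above can be sketched as a small decision function. This is only an illustration under stated assumptions: the stage names, the 30-second threshold, and the order in which the questions are asked are all hypothetical choices, not a description of our actual pipeline.

```java
import java.time.Duration;

public class TestStageSketch {
    // Hypothetical pipeline stages, from fastest feedback to slowest.
    enum Stage { ON_MERGE, LATER_STAGE, DAILY_REGRESSION }

    // Assumed cutoff for "instant" feedback; tune this for your own pipeline.
    static final Duration MERGE_TIME_BUDGET = Duration.ofSeconds(30);

    static Stage chooseStage(boolean critical, boolean edgeCaseOrRarelyUsed, Duration typicalRuntime) {
        // Edge cases and rarely used features go to the daily full regression run,
        // unless the functionality is critical (an assumption of this sketch).
        if (edgeCaseOrRarelyUsed && !critical) {
            return Stage.DAILY_REGRESSION;
        }
        // Slow tests are deferred so merges still get quick feedback.
        // Letting runtime win over criticality is also an assumption.
        if (typicalRuntime.compareTo(MERGE_TIME_BUDGET) > 0) {
            return Stage.LATER_STAGE;
        }
        // Everything else is fast enough to run on every merge.
        return Stage.ON_MERGE;
    }

    public static void main(String[] args) {
        System.out.println(chooseStage(true, false, Duration.ofSeconds(5)));
        System.out.println(chooseStage(true, false, Duration.ofMinutes(10)));
        System.out.println(chooseStage(false, true, Duration.ofSeconds(5)));
    }
}
```

Writing the rules down this way, even informally, makes the trade-offs visible and gives the team one place to argue about them.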

Any type of test will miss bugs

On occasion, doing a bit of manual exploratory testing is a good practice. This involves having someone manually try out different features within your product. Exploratory testing that’s done by someone unfamiliar with a particular feature is a good way to point out usability issues or edge cases that you had not thought of previously.

Change for stability…

UI technology changes quickly, so you need to adapt how you do your testing. This means constantly reflecting on how you test and making sure the testing tools you’re using are the right ones for the job. One problem we ran into with DOM-based testing tools was that we intentionally change our DOM very frequently. This meant we constantly needed to rewrite the tests we had written with those tools. One of the UI Engineering teams created Test IDs to get around this. These are unique IDs that you can add to your UI elements and that are guaranteed not to change. This saved us from having to rewrite tests constantly and provided stability from release to release.
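To illustrate why a stable ID helps, here is a minimal sketch that compares a position-based lookup with an ID-based one. The `Element` class is a tiny stand-in for a DOM node, not a browser or testing-tool API, and the attribute name `data-test-id` is an assumption made for this example rather than the name our Test IDs actually use.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

public class TestIdSketch {
    // Hypothetical attribute name used to carry the stable ID.
    static final String TEST_ID = "data-test-id";

    // Minimal stand-in for a DOM element.
    static class Element {
        final String tag;
        final Map<String, String> attributes = new HashMap<>();
        final List<Element> children = new ArrayList<>();

        Element(String tag) { this.tag = tag; }

        Element attr(String name, String value) { attributes.put(name, value); return this; }

        Element child(Element e) { children.add(e); return this; }
    }

    // Stable lookup: depth-first search for the element carrying the given test ID.
    static Optional<Element> findByTestId(Element root, String id) {
        if (id.equals(root.attributes.get(TEST_ID))) {
            return Optional.of(root);
        }
        for (Element c : root.children) {
            Optional<Element> hit = findByTestId(c, id);
            if (hit.isPresent()) {
                return hit;
            }
        }
        return Optional.empty();
    }

    // Brittle lookup: follows child indexes, so it depends on the exact DOM shape.
    static Optional<Element> findByPath(Element root, int... indexes) {
        Element current = root;
        for (int i : indexes) {
            if (i < 0 || i >= current.children.size()) {
                return Optional.empty();
            }
            current = current.children.get(i);
        }
        return Optional.of(current);
    }

    public static void main(String[] args) {
        // Original layout: the button is the second child of the form.
        Element before = new Element("form")
                .child(new Element("input"))
                .child(new Element("button").attr(TEST_ID, "save-button"));

        // After a redesign the same button is wrapped in a new div.
        Element after = new Element("form")
                .child(new Element("input"))
                .child(new Element("div")
                        .child(new Element("button").attr(TEST_ID, "save-button")));

        System.out.println(findByPath(before, 1).get().tag);             // button
        System.out.println(findByPath(after, 1).get().tag);              // div
        System.out.println(findByTestId(after, "save-button").get().tag); // button
    }
}
```

The position-based lookup silently lands on the wrong element once the markup changes, while the ID-based lookup keeps finding the button, which is the kind of release-to-release stability the Test IDs were meant to provide.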

…and cost effectiveness

Be sure to consider the cost of different testing technologies relative to the size of your organization. A testing tool is not very useful if you can afford only a few licenses that must be shared across a large group of engineers.

Our User Interface Engineering teams are made up of full stack developers, creating Pega tools that are used to build your application's UI. It's been quite a progression of testing over the past few years, and we hope this provides insight into our engineering team...as well as tips and tricks for testing. Want to dive in some more? Check out our other platform and tech posts through the links below.